This week we’re not mentioning Arsene Wenger leaving Arsenal as it isn’t really a media, marketing or PR story (though it will be when the Arsenal brand inevitably gets scrutinised after their manager of 22 years departs), but we are covering Wetherspoons leaving social media, new readership stats proving tricky, the FT’s new opinions, the MP gender gap and Facebook’s attempt to comply with the GDPR.

1. Wetherspoons calls last orders on social media

JD Wetherspoons has removed all its corporate accounts from Twitter, Facebook and Instagram, in a shock announcement that has surprised the marketing world. In a statement, Wetherspoons said: ‘Rather than using social media, we will continue to release news stories and information about forthcoming events on our website (jdwetherspoon.com) and in our printed magazine – Wetherspoon News.’

Rumoured reasons for the departure range from links with the Cambridge Analytica scandal to a simple publicity stunt but, as Marketing Week has pointed out, the real reason might be that social media just isn’t working for the brand. Marketing Week even goes as far as to suggest social media is designed for people not brands – and that ‘people connecting with brands organically on social media was BS from the beginning’. Let that sink in.

With only 44K followers on Twitter and 100K on Facebook, the channels probably weren’t very useful for Wetherspoons, at least not in the way they were being used. While many brands re-evaluate their strategy when it is not working, Wetherspoons has decided to focus its marketing efforts elsewhere. Like if you think they’ve made the right choice, or retweet if you think it’s wrong.

Incidentally, this is being called Wetherspoons’ greatest ever social media interaction, and in no way points to the reason the brand came off the platforms:

2. The Sun rises on new readership statistics

Two new sets of stats were published this week. The newly formed Publishers Audience Measurement Company (Pamco), which has replaced the National Readership Survey (NRS), released overall readership numbers, and ABC published the latest circulation stats.

Pamco describes itself as using ‘world leading methodology’, based on 35,000 face-to-face interviews for print readership and demographic data, and a digital panel of 5,000 participants for online stats. The stats are, in places, surprising and, just as Pamco pointed out about the failings of the NRS, it is hard to determine how accurate they truly are. The numbers reveal that The Sun has the largest overall readership across print and digital, with 33.3m monthly readers, as well as the largest reach on mobile; the Mail has the largest reach on tablet; and the Guardian has the largest reach on desktop. For print, the Metro has the greatest reach, with a readership of 10.5m, but among paid-for titles it’s The National, with 10m (a paper with fewer than 10,000 copies distributed in Scotland is apparently read by twice the population of Scotland).

The stats don’t make it clear how much digital readership each brand has, as there appears to be duplication across the different devices. Even breakdowns within devices are inconsistent: The Sun’s total mobile reach is given as 26.5m, for example, but the phone and tablet figures it is broken down into add up to 29m. There’s also some question over total reach, with Pamco suggesting 46m people read news brands each month; set against The Sun’s 33.3m monthly readers, that suggests over 70% of news enthusiasts read The Sun.

The other stats are easier to follow: the ABC data for March reveals The Sun has regained its title of most circulated paper, after the Metro knocked it off its perch last month. All papers lost readers in March; the biggest drop was a 21% fall for the Sunday Mirror, while the smallest was a 0.11% fall for City AM.

3. FT changes comments to opinions

The Financial Times has published a new guide to make it easier for people to submit opinion pieces for possible publication. They take submissions that are up to 800 words, have personal (informed) perspectives and are unpublished elsewhere. As part of the new guide, the FT has also changed the name of its ‘Comment’ section to ‘Opinion’ to: ‘help readers distinguish our carefully selected and edited articles from the online “comments” below stories’.

As part of the changes, the FT has also decided to drop the introductory ‘Sir’ that traditionally started each letter to the editor, as it felt ‘old fashioned’ and, should the editor one day be female, it will become inaccurate.

The FT has managed to get out ahead of an embarrassing gender story in future, something a grammar school in Guernsey tried to do this week when it scrapped ‘head boy’ and ‘head girl’ roles to establish gender neutral roles (chair and vice chair) but has ended up with two male student leaders.

4. Mind the Agenda Gap

Talking of gender imbalance (wouldn’t it be nice to not need to? Still, it’s not like it’s 2018), The Times has revealed an embarrassing statistic for MPs on Twitter this week. The paper says that 99% of MPs follow more men than women on Twitter. While 46% of worldwide Twitter users are female, every Cabinet and Shadow Cabinet member follows more men than women. Only five MPs follow more women than men: Jo Swinson (Lib Dem deputy leader), Jess Phillips (Lab), Susan Elan Jones (Lab), Ruth George (Lab) and Tracy Brabin (Lab).

One of the biggest imbalances is in business secretary Greg Clark’s following, which is 75% male and includes no female cabinet members. Clark [pictured] recently oversaw the publication of gender pay gap information in large companies.

Jo Swinson was disappointed but not surprised. She said: ‘One of the simplest things we can all do to tackle sexism and other bias is to make a conscious choice to follow, listen to and amplify the voices of women, people of colour and others whose perspectives are under-represented in public debate.’

5. Facebook tries to comply with the GDPR

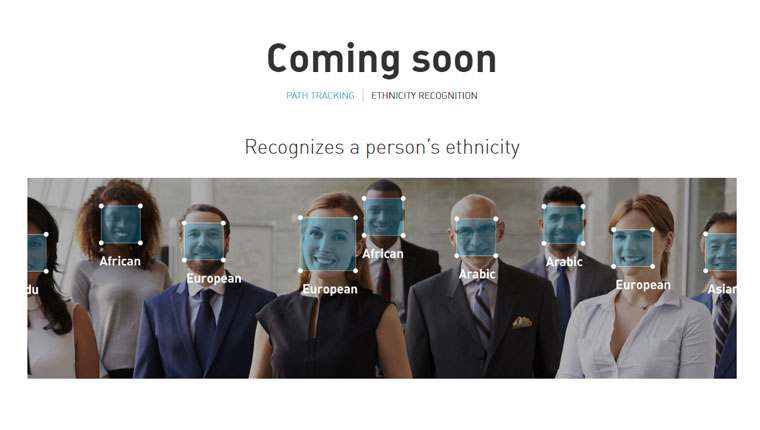

Facebook has started to seek explicit consent from users in compliance with the GDPR, though it has possibly confused the GDPR and PECR, as it should probably already have permission from users to use their information. Part of its permission seeking is for ‘facial recognition’. Some people are suggesting that while Facebook is asking for consent, it is not making opting out easy: users have to click through two additional pages to find the right section. That is against the GDPR, as opting out should be as easy as opting in.

There’s another GDPR question around Facebook, raised by the Guardian. The paper suggests that Facebook is moving its privacy controls from its Ireland office to the US, so it won’t have to comply with the GDPR outside the EU (something it has said it would do). However, the GDPR protects people in the EU, whatever their nationality, and is not a regulation of where data is held, so it is hard to see what this change will actually do in relation to the GDPR.

We’ve answered some questions about the GDPR here, which might be able to help Facebook out.

Did we miss something? Let us know on Twitter @Vuelio.