Media Spotlight: Claire Wardle, First Draft

Using research about audience behaviour and social psychology, Claire Wardle pioneers new ways in which newsrooms can tackle issues associated with fake news and manipulated content. Currently leading strategy and research at First Draft, a non-profit dedicated to finding solutions to the challenges associated with trust and truth in the digital age, Claire is passionate about offering best practices for tackling the misinformation ecosystem. In this spotlight, Claire chats to us about how Donald Trump has ignited the public’s interest in fake news, clickbait headlines, the role that memes play in spreading misinformation, why she doesn’t think hyperpartisan sites like Breitbart are internet trolls, and why audiences need to develop a more critical eye when it comes to consuming news online.

What do you most like about leading strategy and research at First Draft? What are some of the challenges? The good thing is that the US election made people more aware of fake news. There is a culture of philanthropy in the US, so I think this will help us to raise more money so we can create an organisation with proper levels of staff, which will enable us to start thinking about this issue globally and ensure that we are supporting people working in this area. The challenge is that it’s a very noisy space right now. There are many people working to tackle this issue and trying to find solutions, so trying to coordinate those efforts is a big challenge right now.

Misleading content has always been an issue in journalism. So why in such a short space of time has it captured the public’s imagination? Misleading content has always been an issue. It’s captured the public’s imagination because of Trump, and fake news stories. It has been around for years but nobody had really been paying attention to it, but because of Trump and the surprise people felt about his election this interest was amplified. The post-mortem was wide, people were looking at many different things, and that’s why it caught the public’s attention.

For me, fake news has a very particular definition. It’s text-based content that is 100% false, created solely for profit.

Clickbait headlines play a big part in the problem of misinformation. Is the internet to blame for this or the fact that people have short attention spans? It’s the commercial model that causes this. There is an infinite amount of white space on the internet. In order to make money, you have to get people to look at your material. There is so much competition that the way to do that is basically to take advantage of people’s brains. We are humans wired for gossip, we are wired for rumour, we are wired for simplicity, so when someone tells us ‘You’re never gonna guess what happened next’, people click. So it’s the financial model taking advantage of people’s brains. It’s less about short attention spans and more about wanting to know something that somebody else doesn’t know.

During your talk at Newswired you mentioned satire, parody, and sophisticated network connections as the main culprits of misinformation. Can you speak more about how this works? I wouldn’t say that satire and parody are the main culprits at all. They are part of the ecosystem, and if people were smart enough to not be fooled by them I wouldn’t have included them at all. The reason I do is because it goes to show that our brains are so overwhelmed by information. When you see something that supports your world view, that is confirmation bias. When this happens you don’t fire up your usual critical thinking skills, so my point is that people are fooled by what should be pretty obvious, like satirical articles or visuals which are deliberately designed to manipulate our minds, and that’s something we need to worry about.

When I talk about sophisticated networks, that’s slightly different. In this instance, I’m talking about dissemination methods. I’d say those are the parts that people aren’t aware of. Most people couldn’t get their heads around the fact that people are being told what to write in a certain way, and being told to go into comments sections. I also don’t think people understand the bot networks that are being used to systematically attack certain high-level influencers, encircle certain users, and bombard them with certain information.

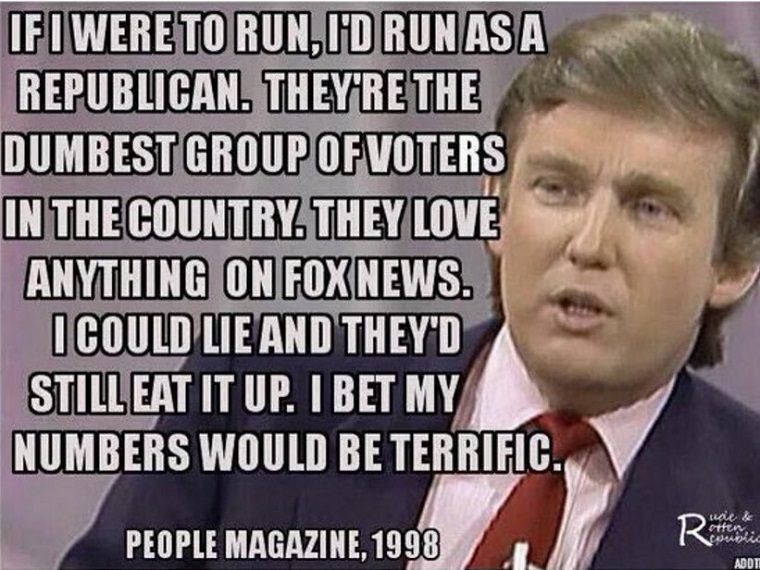

You also spoke about the power of visuals being used in a destructive way, like memes used to persuade people to think in a certain way. Can you speak on the role of memes, which up until recently were looked at as harmless fun but are now one of the most effective tools in spreading misinformation?

That goes back to the brain. We are wired to be less critical of visuals, and again we are always looking for shortcuts because we are so overwhelmed, and then there’s this idea that visuals can’t lie. There’s always been this thing about visuals as the objective truth, and what that means is that we are less critical when many times we should be more critical. It is easy to manipulate a photo, and it’s easy to frame a photo to deliberately disregard the context of an event. So when I talk about memes, I think they are an interesting hybrid part of this, which is that our minds think ‘Oh, this is a visual so I can trust this more’. The thing about memes is you can have a correct photo, you can have a correct quote, and it’s the context that emerges when you combine those two things. Lots of memes are frivolous, they can sit in the satire box, they are designed to not cause harm but to make people laugh, but in this heightened, partisan environment, people aren’t necessarily being critical or seeing the purpose of these critical things or that they are being weaponised.

People do not think of memes as being anything more than harmless fun, and many times they are but we need to look at how they are being used and how they take advantage of people’s brains to stop their critical thinking skills.

What are your thoughts on conservative sites like Breitbart? Would you classify them as being trolls? Breitbart is a hyperpartisan site. They frame information in a certain way, they exaggerate, they use false context, their captions don’t necessarily fit the headlines, but they are not alone in doing this. My discomfort comes from their use of fear. It comes from articles that are consistently framing certain communities as being dangerous and fanning the flames about immigration. That’s my concern.

Their techniques are used across the spectrum, but they are very successful at it and they are good on social media. I wouldn’t call them trolls, I would say that they are coming from a particular position. They are using all of the techniques available to them to push out a message. We might not agree with the topics they cover, but the methods they use are being used by people on all sides of the spectrum.

You mentioned in a recent talk that we need to learn emotional scepticism. Can you talk more about this in regards to how we respond to information that might not be true? Our brains are not designed to equip us to do what we need to do right now. If you think about air pollution, it’s a public health crisis. The amount of false information that is circulating is high, and we need to be very sceptical all the time. And if we have strong emotional reactions to content, in that it makes us feel smug, it makes us feel angry, or it makes us cry, then we need to think: something has happened here, something has been triggered in my brain, I’m going to have to work harder to ensure that this information is true. This is what I mean by emotional scepticism. We need to learn about how our brain functions, and we need to learn the cues and the flags that tell us, when we respond in a certain way, that it is possible our brain is being manipulated, because that’s what’s happening. When we see that coming we should be far more critical.

During your talk you outlined potential solutions to ‘fake news’ such as news outlets working collaboratively with different partners to bring in different information, working together to verify information so newsrooms are not duplicating the efforts of other newsrooms, and bringing audiences into this ecosystem. Can you explain why you see this as being the solution? We’ve just launched CrossCheck in France. We are trying to go into newsrooms and create a live laboratory. We don’t know the answers to this, but it does seem insane that you would have 35 newsrooms all verifying the same information at the same time. This is not a case of journalists looking for new angles, trying to find a scoop. It’s about a piece of disinformation circulating in the ecosystem, and it does not make sense for everyone to do the same work. It does make sense for people to take it in turns to do the work, and to say to everybody else: look at the work that I did, do you agree with this process, and therefore do you want to put your logo against this? We hope that by collaborating we’ll actually increase trust levels from the audience.

Will you be working on any exciting projects this year? The biggest project is CrossCheck, which is launching in France, and we plan to move this to other countries. I’m sure there’ll be elements that will work and others that won’t, so it will be a continuous testing process. And that’s exciting!
