AI and ethics

Artificial intelligence has been in the news recently, for all the wrong reasons. Google naively showed off the functionality of its new Duplex AI, while NtechLab has announced a new product to identify ethnicities (unfortunately not a joke).

Google Duplex

Google Duplex is a new AI assistant that can handle tasks over the phone. That’s right, Duplex is able to make phone calls and book appointments on your behalf. Sundar Pichai, CEO of Google, showed the assistant off at the company’s I/O developer conference, including a recorded example of Duplex calling to book a haircut.

In the clip, Duplex is shown to be indistinguishable from a human on the phone. The crowd, unsurprisingly, loved it – even whooping when the AI sounds most human, saying ‘Mmm-hmm’ to acknowledge a point made by the hairdresser. That reaction is no surprise: this is Google’s I/O developer conference, and for these people super-advanced AI is a great achievement.

Unfortunately for Google, a number of people and news outlets have now raised concerns over not being able to tell the difference between people and computers.

What Google failed to mention is that the AI would identify itself, so the person on the other end of the phone would know it was chatting to a robot – but even that throws up questions and concerns.

What if the AI doesn’t understand a question it’s asked, or an accent? How many times is a vendor likely to repeat themselves or reword queries if they know they’re talking to a machine? And what does it say about society that people are now getting machines to book haircuts on their behalf?

Google suggests it can be used by businesses to automatically take bookings, though how many people want to call up to book a table at a restaurant and be handled by a machine?

There are also concerns over the data Duplex gathers on individuals – for this to work you have to tell Google an awful lot about what you want booked, when you’re free, alternative times for the appointment, the details of what you’re booking and why. Under the GDPR, if this data is processed by Google then you have to be informed – imagine the conversation with your AI assistant if it has to explain all the ways the data will be used!

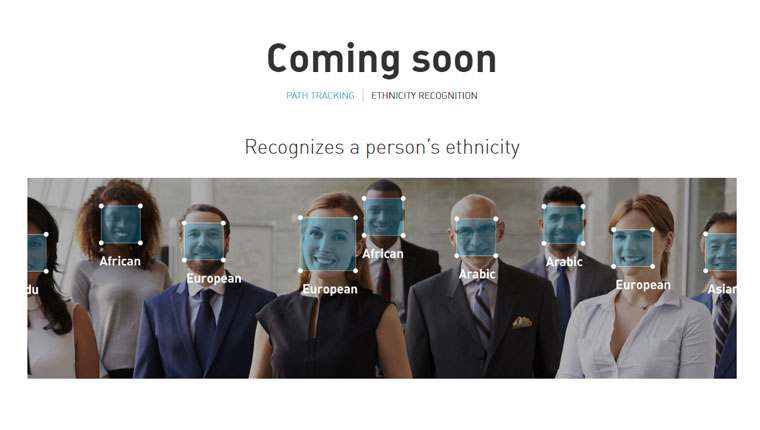

Recognising ethnicity

The other ethical AI story is even more concerning. Russian NtechLab is a group of experts in the field of deep learning and artificial intelligence; the website states they ‘like to invent algorithms which work in unconstrained real-life scenarios’. The only listed product so far is a facial recognition tool.

It’s the ‘coming soon’ section that raises concern, with a product called ‘ethnicity recognition’. There’s no further information, but the image suggests it will identify people’s ‘ethnicities’ based on their faces. It’s not clear why this would EVER be needed, nor how it could ever be accurate.

As Forbes points out, it’s amazing that these companies are able to create such tools without seeing the ethical issues that are more obvious to those who don’t work in tech. Sociologist Zeynep Tufekci said: ‘Silicon Valley is ethically lost, rudderless and has not learned a thing’.

Artificial intelligence is not bad; it makes all of our lives easier every day and, as the CIPR’s ongoing #AIinPR study shows, it’s of great benefit to the PR and communications industry. But when developing AI functionality, companies need to consider their responsibilities towards data subjects and clients. The GDPR comes into force on 25 May and the automated processing of data, for the benefit of AI, will be under more scrutiny than ever before. It’s hard to see how an ethnicity recognition tool will pass the stringent new regulatory requirements.

As for Google, the whole company is built on AI and for the most part, people are not concerned. Where Duplex has fallen down is by being too real. We’re not in Blade Runner* – society just isn’t ready for a world where it’s impossible to distinguish between computers and people, fiction and reality. At least, not yet.

*Insert your own favourite popular 80s sci-fi film here.
